Algorithms & Love: Dancing with the Creative Tension of Our Times

[Below is an adapted transcript of a talk delivered by ServiceSpace founder, Nipun Mehta, at the 2017 Wisdom Together conference in Munich, Germany]

Today I want to talk about this very interesting tension between algorithms and love. While algorithms are a set of recipes, or a set of instructions, that help us filter data on the outside, it is our intuition that helps us with a lot of data on the inside. Now, that intrinsic data doesn't have the same kind of boundaries as extrinsic data, so holding them together makes for a very interesting tension -- one that I want to explore today.

Many years ago, I saw a movie called “Minority Report”. Tom Cruise opens in his dashing style and makes an arrest: “Sir you're under arrest.” The shocked arrestee asks, “What for?” “For a crime you're about to commit.”

At a cursory glance, that feels like, “Wow! Imagine a world without crime.” Very sci-fi and futuristic. Except that future is already here, with hundreds of cities around the world doing this kind of predictive policing. In real life, though, it’s not as much of a "Wow" as it was on the movie screen.

Before I explore that further, I'd like to share a bit more context about my personal journey -- because it led me to both algorithms and love.

Like most of us, my journey started with an unrelenting pursuit of success. In college, I studied computer science. I've loved coding since seventh grade; even to this day, if I’m at home, I’m coding. Computers fascinated me, but that fascination was initially driven by the extrinsic motivation of success. My first and only job was at Sun Microsystems, which is now part of Oracle. (Incidentally, I used to work on the same campus that is now Facebook's headquarters.) I was optimizing C++ compilers, and for a few years that mindset subsumed my life. For instance, if I had to go from point A to point B, I would automatically analyze the shortest path -- which is a 45-degree line, of course. So I would never just go down this street and take a left -- I would always aim for routes that were the closest fit to a 45-degree path.

Soon, though, I realized that extrinsic rewards weren’t satisfying me. It wasn’t enough. So I explored other avenues, and one of them was service. Helping other people and the community, without any personal agenda. Right in the heart of Silicon Valley, we started an organization called ServiceSpace that initially built websites for nonprofits and eventually manifested a wide array of other projects. As far as motivations go, this kind of service for me was partly about extrinsic impact and partly about intrinsic joy. I enjoyed it more, but it got me curious about what lay further on the intrinsic end of the spectrum.

That’s what got me to pursue stillness. I noticed that the quieter my mind became, the more connected I felt -- with all life. I wasn’t creating those ties, but rather just falling into the web of our existing interconnections. In 2005, my wife and I embarked on a walking pilgrimage to push ourselves a bit more in this direction. Since Gandhi inspired us, we decided to start at the Gandhi Ashram in India. We walked south, doing small acts of service along the way, trying to purify our minds and hearts to find a greater stillness. We ate whatever food was offered, slept wherever we were offered a place, and survived entirely on the kindness of strangers. It was a profound and humbling experience.

What I started noticing was the growing tension between my training of parsing the material world with algorithms and my stumbling into this intrinsic sense of love borne of a quiet mind. How do these things come together?

That became an organizing question in my life, and I think it's a question that we're all needing to navigate in our current era.

Going back to Minority Report, predictive policing is no longer a distant fantasy. A set of algorithms detects “criminals” before they commit the crimes. And it's not just limited to crime. This is how we give out bank loans, this is how we hire public school teachers, this is how we scout sports teams and talent.

Algorithms are everywhere. And they really seduce us with the question, “Can we predict everything?” Underneath, that raises a deeper question: Can we control everything?

Google certainly thinks so. Ray Kurzweil, a director of engineering at Google, recently made headlines with the claim: “Our company is going to be able to know you better than your spouse.” The logic follows like this: "Google will know the answer to your question before you have asked it. It will have read every email you've written, every document, every idle thought you've tapped into a search engine box, it will know you better than even your intimate partner, better perhaps than even yourself."

This isn’t just about Google. It’s the default narrative of the tech industry at large.

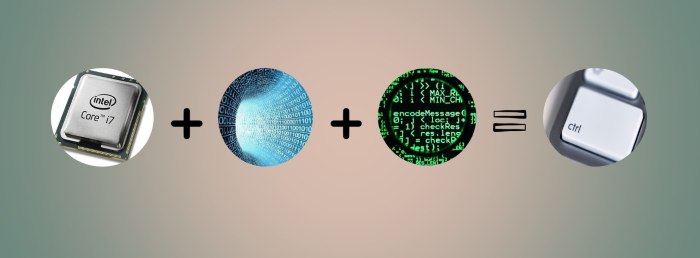

The formula for this innovation is simple.

First, you build a lot of computing power. As Moore’s law has accurately predicted, we have an ample amount, and it is increasing exponentially. Experts predict that, in 15 years, a single chip will have more processing power than all the brains of all of humanity put together. Maybe even in 10 years. Computers that used to take up entire rooms just a few decades ago are now in all our pockets, and if that continues exponentially, we can just imagine where that goes.

Second, you add data -- big data -- to that processing power. Companies are constantly extracting all kinds of data from us. Your cellular provider, for example, may actually know whether you rushed to get here or whether you were driving slowly. You didn't necessarily give them that information, but based on how fast your phone traveled, it knows. By itself, that sounds benign, but when you add that bit of information to all kinds of other data on your personal preferences, you can start to imagine that a computer actually may know us better than we know ourselves. In fact, one study of Facebook likes found that a computer model needed just 10 likes to judge a person's personality better than their work colleagues could, and with 300 likes, it did better than that person's own husband or wife!

Lastly, you apply algorithms to the processing power and big data.

Algorithms are a set of instructions. The Uber app you might've used to get here is a set of algorithms. Same with your GPS. Traffic lights are operated by algorithms. The markets we transact in, the movies we watch, the tennis matches we analyze are all increasingly run by algorithms. Everything around us is embedded in these algorithms.

The latest development is self-learning algorithms that teach themselves, and they are powering early versions of AI (Artificial Intelligence) that do much more than operate a vending machine. We already know AI beats us at chess, but also at more intuitive games like poker; it can predict heart attacks better than doctors; it can drive our cars -- and yes, algorithms have arguably created music indistinguishable from Bach! Naturally, it is expected to be a multi-trillion-dollar industry in the coming years. Sundar Pichai, the CEO of Google, recently spoke of their strategic shift, "We are evolving from mobile-first world to AI-first world." All undergirded by algorithms.

When we combine computing power with big data with algorithms, what we end up with is control. That control can be used in many ways.

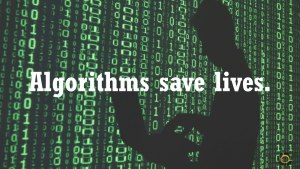

Certainly, an argument can be made that algorithms save lives. They certainly do -- there's no point in denying that. If a group of us are tasked with creating two million prescriptions, research shows that we'll make 20,000 errors. Algorithms make zero errors. We can go down the list of examples. Pedro Domingos, author of “The Master Algorithm”, says, “If every algorithm suddenly stopped working, it would be the end of the world as we know it.” We can't get away from it. They are embedded into the fabric of our daily lives.

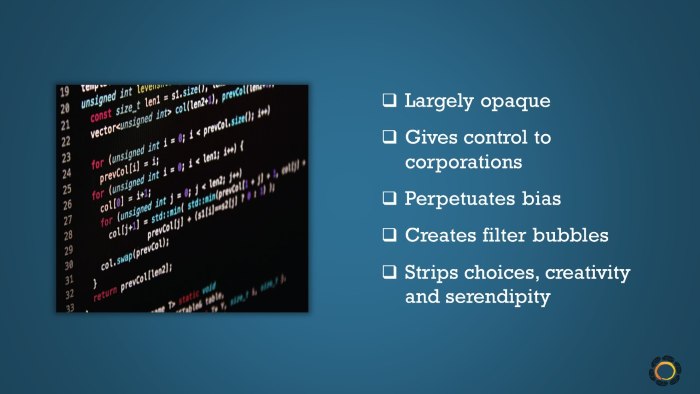

On the flip side, though, algorithms also have their share of weaknesses. The biggest failing is that algorithms are largely opaque to users. We don’t have public discourse on algorithms. It’s a black box primarily controlled by corporations whose overriding interest is to maximize profit and turn users into consumers. That can be problematic, to say the least. Algorithms perpetuate bias, the implicit existing biases of their creators. They create filter bubbles, where we reinforce an echo chamber instead of exposing ourselves to a diverse spectrum of ideas. The recent “fake news” or “alternative facts” phenomenon is emblematic of the rise of such filter bubbles. And algorithms significantly strip us of creativity, of our awareness of and access to choices, and ultimately of serendipity. If everything is formulaic, where is the room for serendipity?
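To make the filter-bubble loop concrete, here is a toy sketch in Python. It is not how any real platform ranks content; the topic list, the hypothetical user who clicks everything shown, and both ranking functions are assumptions for illustration. A feed ranked purely by past engagement only ever shows what was already clicked, while reserving a single rotating slot lets the rest of the world back in.

```python
from collections import Counter

# Hypothetical topics; any list would do.
TOPICS = ["local news", "science", "sports", "arts", "politics", "travel"]


def engagement_ranker(clicks: Counter, k: int, step: int) -> list[str]:
    """Rank topics purely by past clicks (`step` is unused here).

    sorted() is stable, so ties keep the order of TOPICS.
    """
    return sorted(TOPICS, key=lambda t: -clicks[t])[:k]


def with_exploration(clicks: Counter, k: int, step: int) -> list[str]:
    """Fill k-1 slots by engagement, plus one slot that rotates through every topic."""
    feed = engagement_ranker(clicks, k - 1, step)
    wildcard = TOPICS[step % len(TOPICS)]
    if wildcard not in feed:
        feed.append(wildcard)
    return feed


def simulate(recommender, rounds: int = 30, k: int = 2) -> set[str]:
    """Return every topic the hypothetical user was ever shown.

    The user is assumed to be curious about everything, but can only click
    what the feed shows, and clicks every item in it.
    """
    clicks: Counter = Counter()
    seen: set[str] = set()
    for step in range(rounds):
        for topic in recommender(clicks, k, step):
            seen.add(topic)
            clicks[topic] += 1
    return seen


print(sorted(simulate(engagement_ranker)))  # ['local news', 'science']
print(len(simulate(with_exploration)))      # 6
```

Both feeds see the same clicks; the only difference is one slot deliberately set aside for something the user has not already chosen, a small, deliberate room for serendipity.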

In “Weapons of Math Destruction”, Cathy O’Neil makes a compelling case that algorithms unfairly punish the underprivileged. A lot can be said, but she sums it up perfectly in one short sentence: “Algorithms simply automate the status quo.” Keeping things as they are.

Let’s consider the status quo for a moment. As of a few months ago, eight men owned more financial wealth than the bottom 3.6 billion people in the world. Here’s another status quo -- disconnection. More people die of suicide than in wars and natural disasters put together. Here’s a photo of everybody taking a selfie with Hillary Clinton when she was running for president. No one is looking at her. We can't just blindly criticize the younger generation, because we are the ones who have designed these devices, we’re the ones who have marketed solutions to them that prioritize cheap friendships, where people are reduced to pixels to be grabbed for my Facebook wall.

This is the status quo. And the quote that follows also represents the status quo -- Reid Hoffman, the co-founder of LinkedIn, shared these words with The Wall Street Journal a few years ago: “Social networks do best when they tap into one of the seven deadly sins. Facebook is ego. Zynga is sloth. LinkedIn [his own company] is greed.” Here’s a guy who succeeded in the market economy, and he's saying our organizing principles are the seven deadly sins.

The question we hold, that I think all of us are holding, is -- what about the seven viral virtues? And if that's not coming out of the market, where is it going to come from?

If this is the status quo that we’re propagating, is it a surprise to see headlines like this: “A beauty contest was judged by AI and the robots didn't really like dark skin.” You might shrug it off and say, “Okay, maybe that's just a one-off case.” Well, consider the case of MIT grad student Joy Buolamwini. She was working with facial analysis software when she noticed a problem: the software didn't detect her face -- because the people who coded the algorithm hadn't taught it to identify a broad range of skin tones and facial structures. Now we can start saying, “Whoa, wait a second, that doesn't sound right!” Joy actually started an effort, Coding for Rights, which is now addressing some of these issues, but it raises a lot of red flags for things that typically fly under the radar.

At the end of the day, algorithms are really only as good as the data we give them.
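A deliberately tiny Python sketch of what "only as good as the data" means: a hypothetical detector that accepts any input whose single measured feature falls within the range it saw during training. Real facial-analysis systems are far more complex; the feature, the 0-100 scale, and the training values here are made up for illustration. The code is identical in both cases; only the training data differs.

```python
from dataclasses import dataclass


@dataclass
class RangeDetector:
    """Toy detector: accepts an input if its feature value lies within the training range."""

    low: float
    high: float

    @classmethod
    def fit(cls, examples: list[float]) -> "RangeDetector":
        # "Learning" here is just remembering the smallest and largest values seen.
        return cls(low=min(examples), high=max(examples))

    def detects(self, x: float) -> bool:
        return self.low <= x <= self.high


# Hypothetical feature values on a 0-100 scale.
narrow = RangeDetector.fit([62, 70, 75, 81, 88])     # training data covers only part of the scale
broad = RangeDetector.fit([12, 25, 40, 62, 75, 88])  # same method, more representative data

print(narrow.detects(30))  # False: nothing like this was in the training set
print(broad.detects(30))   # True
```

Nothing in the narrow detector is malicious; it simply never saw anyone outside its training range, which is exactly how such failures can fly under the radar.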

Microsoft did this Twitter experiment with a chatbot, an artificially intelligent machine that would automatically respond to people’s tweets. What happened? In less than a day, they had to shut it down. This was the Tay bot. Within hours, this AI machine was spewing out stuff like this: “Bush did 9/11. Hitler would have done an even better job.” I don’t even want to read this next tweet. Racist, xenophobic. It didn’t work.

If we look at that failure, we can innovate in two directions.

One approach is -- big data to bigger data. If we had more information, our technology would be smarter.

And how far can bigger data go? Judging by today's corporations, pretty far. Facebook tracks the items you hover over when you're just surfing. Delete a line in your "Compose" window and Gmail tracks that. The data we can extract, the data we handily give up for the sake of short-term convenience or simply out of ignorance, is of monumental proportions. But we extract all this data to what end? Here’s a cover of Time Magazine: “Can Google Solve Death?” Yes, billions are being spent on it. “But wait,” you might wonder, “what does that even mean?” Well, in some schools of thought, aging is seen as a disease that our bodies are incapable of solving. As soon as we create powerful machines, with a lot more data and better algorithms, we’ll be able to live forever. At least, that’s the dream. And of course, you can imagine that there would be a great market response for this, right?

Today, I want to ask an even harder question. I want to ask a question that invites us to unravel some of the unconscious assumptions that we might have baked into our algorithms. And that question is: Can we solve love and forgiveness? Or is that even something to be solved?

To start, let's watch this video of a true story.

Here you have a man who committed 48 separate murders. Seemingly, everyone hates him and he hates everyone. Stalemate. In court, the father of one of the victims says to the convicted man, “Sir, people like you make it very difficult for me to practice my values, but I want you to know you are forgiven.” The cold-faced murderer begins to sob.

What is the predictive algorithm for that? How many conditions does it take for that moment to occur? And are we arrogant enough to think we can map all the conditions, across so many generations, that resulted in that moment of inner transformation? That transformation, in the father of the victim and the murderer -- what is the algorithm for that?

Earlier, we spoke about two potential responses when algorithms fail us. One is to go from big data to even bigger data. That’s the currently dominant approach.

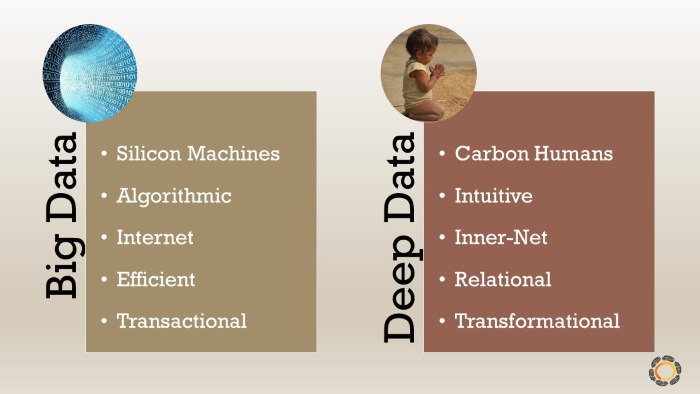

The second approach, however, that I'd like to explore today, is to shift from Big Data to Deep Data.

Big data is understood by silicon machines, whereas deep data is processed by carbon-based life: humans. Big data is algorithmic; deep data is intuitive. Big data powers today’s Internet, but it is deep data that has powered the web of our connections -- the innernet -- for millennia.

Big data is very efficient; if you can do something in five steps, can you learn how to do it in three? But deep data is resilient, and it is resilient because it is relational. It facilitates a shift from transaction to transformation. The transformation that is borne of serendipity, of profoundly interconnected deep data that subtly meanders past the neatly drawn boundaries of our apparent identities. Our human bodies are the very apparatus, with the native app in our hearts, that gives us access to this profound intelligence. We simply need to activate it.

The question that I hold isn’t one versus another, but really, how can we marry the two?

We all know that marriage can be messy, but it's absolutely worth the price, right? So how do we marry big data, which clearly has a lot of strengths, and this deep data that we're already swimming in? That's a great possibility to explore.

Garry Kasparov, at the age of 22, was the best chess player in the world, and he stayed that way for most of the next twenty years, until he retired. In 1997, he played IBM's Deep Blue in an epic “humans versus machines” battle -- and lost. Everyone began waving the end-of-times flag: “Machines are taking over!”

At the end of the hoopla, though, Garry Kasparov made a rather interesting remark, “Oh wait a second, I didn't realize that Deep Blue had access to every single chess move that every single human being had ever made!” He continued, “Well, if I had access to that, I would win.” And so they said, "Let's try it." And they tried it, and he was right! Still is. Today, the best chess in the world is not played by humans, but neither is it played by computers. The best chess in the world is played by a hybrid, what Kasparov calls Centaur Chess or freestyle chess, where humans play aided by computers. Centaur is an allusion to the Greek mythic figure that is half-human, half-horse.

The overarching idea is that there are multiple forms of intelligence, some of which play into a computer's strengths and some of which play into the strengths of our carbon-based apparatus. So we must innovate solutions that leverage these multiple forms of intelligence.

And that really raises the question: what is an intelligence that is uniquely human?

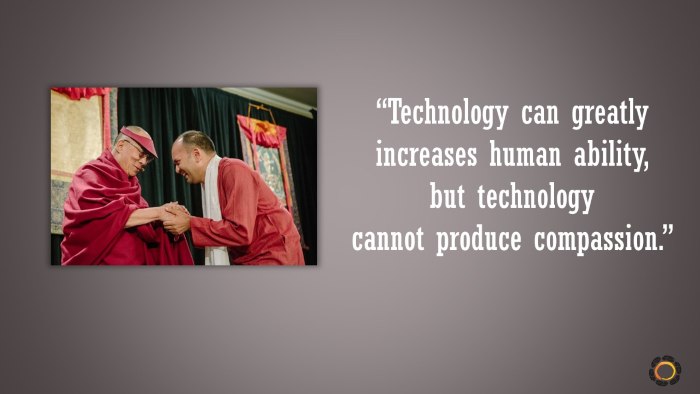

What is an example of something that only humans might be able to do? A few years ago, I had the occasion to receive a blessing from the Dalai Lama. On that day, he shared a beautiful quote that has stayed with me, “Technology can greatly increase human ability but technology cannot produce compassion.” The Dalai Lama knows a thing or two about compassion, so he's not just talking about compassion as an emotion or compassion as a sympathetic response when you see someone suffering. He's not even talking about it as empathy. He's talking about something much, much deeper, something that’s way more fundamental to our existence, and he's citing it as a human trait. And maybe not just confined to humans, but a unique trait for all life.

If we follow that further, we can ask the question: How do we marry the intelligence of algorithms and compassion?

In ServiceSpace, we began by innovating around these different kinds of intelligence. Back in 1999, we started building websites for nonprofits, and that gradually blossomed into an ecosystem of all kinds of different projects. We have DailyGood, a portal for just good news, KarmaTube for inspiring videos, and many more. On KindSpring, we started a game of kindness that allows small acts of kindness to spread. What I want to talk about today, though, is Karma Kitchen, because it's a great example of how big and deep data can come together.

As context, Karma Kitchen is a pop-up restaurant experiment. A team of volunteers takes over a regular restaurant, and if you walk into this restaurant, you get a meal like you would anywhere else -- except that at the end of the meal, your check reads zero. Your bill is zero because someone before you paid for you, and you now get a chance to pay it forward for someone after you. Most people think, “Oh, that can't work in real life! Don’t you know the first rule of economics -- that people aim to maximize self-interest? People won’t pay.” We said, “Look, if no one pays, we'll stop after the first week. Let’s just see.” It was meant to be a one-time experiment, but it ended up continuing week after week after week after week -- tens of thousands of people -- and we’re like, “Wow, we're really onto something!” Today, it has been tried in 24 locations around the world, and it really starts to reshape our collective narrative of human possibility. Researchers at UC Berkeley have actually studied it, and the title of their paper was, “Paying More When Paying For Others.”

When people are moved by love, they tend to respond with even greater love.
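The pay-it-forward mechanics can be sketched as a simple running balance. This is a hypothetical model, not Karma Kitchen's actual bookkeeping: the seed gift, the flat meal cost, and the contribution amounts are assumptions for illustration. Each guest's meal is drawn from a pool that earlier guests filled, and each guest's gift goes into the pool for whoever comes next.

```python
def pay_it_forward(gifts: list[float], meal_cost: float, seed: float) -> list[float]:
    """Return the shared pool's balance after each guest.

    Hypothetical model: every check reads zero because the guest's meal is
    covered by the pool (a seed gift plus earlier guests' payments); the guest
    then adds whatever they choose for those who come after them.
    """
    balance = seed
    history = []
    for gift in gifts:
        balance -= meal_cost  # this meal, already paid for by someone earlier
        balance += gift       # this guest's gift to someone later
        history.append(balance)
    return history


# Hypothetical numbers: a $20 seed gift and meals that cost $20 each.
print(pay_it_forward([25, 15, 30, 20], meal_cost=20, seed=20))  # [25, 20, 30, 30]
```

In this model, no guest has to cover their own meal; the chain simply continues as long as the balance never drops below zero, that is, as long as gifts keep pace with the cost of the meals over time.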

If we peel back the layers, you notice a secret sauce: it's all run by volunteers. For instance, at our Berkeley location, a volunteer crew of a dozen or so people operates the whole show. The person who greets you at the door has taken their Sunday off to serve you, the person who waits on your table is volunteering, the person who is plating your food in the back is just there to serve you, the person who is bussing your table is a volunteer, the person who is doing the dishes is a volunteer. At some point or another, most diners stop and say to themselves, “Wow, all these people are here just to serve me, just so I can have an experience in generosity?” It completely moves their hearts.

If you have to organize ten volunteers, you can do it in a certain way, but then if you go from ten to a hundred to a thousand, to let's say ten thousand -- it’s way too much overhead. Typically, we hire a few staff to manage it. As soon as you have a few staff, you've got to have a fundraising strategy. Then, as that scales up, you start to look at people as numbers. Inevitably, it starts to strip away this raw organic energy that is present in every interaction of Karma Kitchen. The fundamental reason why we're able to retain Karma Kitchen as a volunteer-run operation, as this powerful container of compassion’s deep data, is precisely because of algorithms. We’ve been able to create technology and additional context that allows us to reduce our organizing overhead to near zero, which then allows us the freedom to offer everything purely as a labor of love.

In such a context, transformation happens naturally. Several years ago, I remember volunteering one Sunday at Karma Kitchen Berkeley. At that time, Karma Kitchen was the top-rated restaurant in the city on Yelp. Imagine that -- a volunteer-run outfit, where folks get a 45-minute orientation before they start, no restaurant experience required, and yet this place is people’s favorite. Volunteers just fall into this organic flow and coordination -- it’s an incredible testimony to the power of love, and perhaps the power of deep data.

So, one of those days, a guy walks in. A well-built fellow, with very visible tattoos. He comes in and we explain that this restaurant is an experiment in generosity, "Would you like to have a table?” He says yes. “Great, how about this one here?” “No, actually, can I have that one in the back?” And so he's seated at the back where he quietly reads the menu, is served his food, receives his bill and then leaves without speaking to anyone. Later he explained, "I wanted the seat in the corner so that you wouldn't see me tearing up. See, I used to steal from this place years ago. I used to be a food thief because our family didn't have enough to eat. I would wait outside during their food deliveries -- as soon as they go into the restaurant to restock, I would go in to steal the food. And to come into that same place, and to be treated with this kind of love and respect, I am just so moved." He dropped in the next week and insisted, "I know you don't take drop-ins but you have to let me wash the dishes." So we did dishes together. It was his way of saying thank-you and paying it forward.

That is transformation. It is not predictable, it is not formulaic, you cannot put it in an algorithm. It emerges from a field of deep relationships. It emerges in a field where all its actors are behaving with compassion. This is not just limited to Karma Kitchen -- it happens everywhere. It's actually all around us, but all too often we gloss over it.

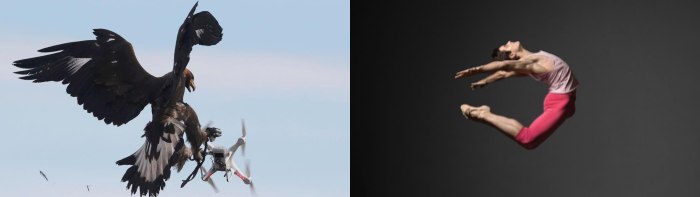

So big data and deep data leave us with a choice. Are we going to embrace this creative tension between the technological revolution and our inner revolution? If we don't, what we'll start seeing is the kind of fight that drones and eagles are engaged in right now. Eagles are being trained by France’s anti-terrorism unit to take down drones, and for now, they’re winning, but drones are only going to get smarter and bigger and stronger. Do we really want this fight? Or can we evolve into a symbiotic relationship that allows us to dance? Sure, there are opposing forces, but can we hold them together with grace and elegance?

I want to end with the story of a man who invites us to step into that space of dance. A few years ago, at New York’s 137th Street subway station, Wesley Autrey was about to take a routine train ride with his two daughters. All of a sudden, a fellow passenger -- a stranger -- goes into a seizure and falls onto the tracks. Wesley notices that a train is coming and the man’s tremors won’t allow him to climb back up. So he jumps onto the tracks, pins him down and lies on top of him as the subway train crosses over him. Before the train conductor realizes this, all but two bogeys had already crossed over the fallen man and Wesley. The hat on Wesley’s head had grease on it.

Wesley’s intelligence isn’t predictable. He had two daughters with him, at that very train station, and he was still willing to sacrifice his own life for a complete stranger on the tracks. That is not a result of something that is formulaic, but rather a moment of beauty that emerges from a complex set of interconnections in his inner ecology. How do we learn to honor that deep intelligence that is already native in all of us? How do we learn to marry it with the awesome computing power, the ginormous big data, and the sophisticated algorithms that are now available to us? Most importantly, how do we make sure that we lead with this kind of love? How do we make sure that instead of trying to dominate nature, we're actually in concert with its emergence?

I think that's the invitation -- to hold all these questions, and craft a new narrative.

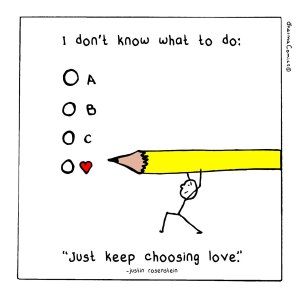

In the end, if we ever get stuck between choices A, B, C, or D, I hope our algorithms always point us to love.

Nipun Mehta is the founder of ServiceSpace.org, a nonprofit that works at the intersection of gift economy, technology and volunteerism.